Message from the Chief

It will come as no surprise to you that innovation, data and technology are an integral part of who we are and what we do here at the Communications Security Establishment Canada. When I meet with people from across our organization, whether they are cryptanalysts, linguists, data scientists or systems architects, I am struck by their commitment to our mission and their creativity in solving some of the hardest problems we face.

Emerging and disruptive technologies like artificial intelligence (AI) are not new to us. In fact, machine learning (ML) and data science tools and techniques developed in-house, right here at CSE, have long shaped the advancement of AI/ML within the security and intelligence community and society more broadly. The compelling need to leverage AI to provide better, faster insights about the threat landscape is clear: we simply cannot afford to wait or we will be left behind. AI will not change what we do, but it will be a vital tool among many in our cyber security and foreign signals intelligence toolboxes.

Of course, as with any major technological advancement, AI is a double-edged sword. It can change lives for the better, and it will. At the same time, threat actors will harness this technology for their own ends, amplifying the impact of existing threats to security such as malware, ransomware and social engineering, among others.

This AI Strategy will ensure we are fully ready to understand and respond to a rapidly changing threat landscape that will be increasingly driven by AI-equipped malicious actors. Central to this AI strategy is the same “secret sauce” that makes CSE a world-class security and intelligence organization: our extraordinary people, cutting-edge technology and wide-ranging partnerships.

I am challenging all CSE employees to participate in this effort by building your AI literacy, actively participating in debate and discussion, and by innovating and experimenting in a safe, secure and responsible way. We will always be thoughtful and rule-bound in our adoption of AI, keeping responsibility and accountability at the core of how we will achieve our goals. Recognizing that these technologies are fallible, we will experiment and scale incrementally, with a focus on rigorous testing and evaluation, keeping our highly trained and expert humans-in-the-loop.

I look forward to working with you all to support this unified AI effort and to learning and evolving as we go.

Your Chief,

Caroline Xavier (she/elle)

Executive summary

The Communications Security Establishment Canada’s (CSE) expertise has evolved over the past eight decades in lockstep with technology’s advance. Today, CSE is at the forefront of the next generation of communications and digital technologies, including the use of AI and ML. We are enthusiastic about the vast benefits that AI brings and will leverage the technology to help safeguard Canada’s national security, economic prosperity, democratic values and the safety of Canadians.

CSE is going through a period of significant growth and change as our threat environment evolves and demand for our products and services increases. While AI/ML are not new to the work we do, the field of AI is changing rapidly, and we must adapt with it. The CSE AI Strategy is the first of its kind specifically focused on AI/ML technologies as they apply to CSE’s mission. It is a foundational document that sets out key directions and goals for being an AI-enabled organization in the face of incredibly rapid technological change. The strategy will help set our priorities, guide our actions and anchor our efforts to refine and adapt our governance, policies and processes.

As CSE increases its AI readiness through one unified AI effort, we are committed to:

- developing new capabilities to solve critical problems through innovative use of AI and ML technologies

- championing responsible and secure AI through a period of rapid technological innovation

- countering the threats posed by AI-enabled adversaries to CSE and to Canadians

The three commitments described above will be supported by our four AI cornerstones:

- People: enabling humans, not replacing them, with AI

- Partnerships: partnering with industry, academia, the security and intelligence community and the wider Government of Canada community to multiply our capabilities

- Ethics: empowering safe, secure, trustworthy and ethical AI practices

- Infrastructure: implementing effective AI governance and supportive infrastructure, such as modernizing our high-performance computing and data infrastructure

Introduction

CSE is Canada’s cryptologic agency, responsible for foreign signals intelligence, cyber security, and foreign cyber operations. CSE includes the Canadian Centre for Cyber Security (Cyber Centre), which is the federal government’s operational and technical lead for cyber security. The Cyber Centre is the single unified source of expert advice, guidance, services and support on cyber security for government, critical infrastructure owners and operators, the private sector and the Canadian public.

CSE’s mandate is detailed in the Communications Security Establishment Act (CSE Act) and has five parts:

- foreign intelligence

- cyber security

- active cyber operations

- defensive cyber operations

- technical and operational assistance to federal partners

In addition, as part of Canada’s national security community, CSE is subject to external review by the National Security and Intelligence Review Agency and the National Security and Intelligence Committee of Parliamentarians. The Intelligence Commissioner also performs an independent, quasi-judicial oversight function related to foreign intelligence and cyber security activities.

CSE is part of the Five Eyes, the world’s longest-standing and closest intelligence-sharing alliance. The Five Eyes includes the signals intelligence and cyber security agencies of Canada, Australia, the United Kingdom, the United States and New Zealand.

CSE is also part of a much broader ecosystem that encompasses the Canadian security and intelligence community, the Government of Canada, the defence community, other allied nations, as well as industry and academic partners. Looking ahead to key priorities such as delivering on government defence commitments, including the planned creation of a joint Canadian cyber operations capability, these partnerships will become even more integral to our success. Partnerships will also help us drive industry best practices, seize technical advantages, and broaden collaboration for solving common challenges.

Purpose of CSE’s AI strategy

AI is a broad discipline of computer science that focuses on technologies demonstrating behaviours normally associated with human intelligence, such as learning, reasoning and problem-solving. While AI and ML are not new to the work we do at CSE, the field of AI is changing rapidly, and our organization must adapt with it.

The CSE AI Strategy is a foundational document that sets out key directions and goals for being an AI-enabled organization in the face of incredibly rapid technological change. The strategy will outline our goals, guide our actions, and anchor our efforts to refine and adapt our governance, policies and processes to this continuously evolving AI and data landscape. It will involve innovating and integrating AI capabilities in line with our mandate, championing responsible and secure AI, and countering the threat posed by malicious use of these technologies. The strategy will complement federal government AI policies and guidelines.

CSE’s “3+4+1” AI Strategy Framework: Three commitments, four cornerstones, one unified AI effort

In leveraging AI / ML to help safeguard Canada’s national security, economic prosperity, democratic values and the safety of Canadians, CSE has identified the three commitments that define our purpose, as well as four cornerstones that will ensure we have the foundation necessary to succeed in these efforts. Finally, one unified AI effort will guide CSE actions as they relate to this strategy.

It is crucial for Canada’s security that CSE advances its AI capabilities and expertise with an enterprise-wide, forward-looking vision, and with a guiding objective that people across CSE are empowered to use AI and are prepared to do so responsibly.

Long description - CSE’s “3+4+1” AI Strategy Framework

This image presents the strategic vision built around harnessing the power of artificial intelligence (AI) while ensuring its responsible use.

It begins with three commitments, acting as guiding principles:

- Innovating and integrating AI for national security.

- Championing responsible and secure AI.

- Understanding and countering the threat of malicious AI use.

To support these commitments, the framework is built on four cornerstones, representing the foundation of success:

- People: Skilled individuals are at the heart of this effort, bringing expertise, creativity, and adaptability.

- Partnerships: Collaboration with stakeholders, whether within the organization or across industries and governments, is essential for progress.

- Ethics: A commitment to ethical practices ensures trust, transparency, and fairness in AI applications.

- Infrastructure: Robust systems and resources are necessary to enable the seamless integration and effective functioning of AI technologies.

All these components converge into a unified effort: a vision of an organization committed to evolving and adapting to remain ready for the opportunities and challenges posed by AI.

The evolving landscape

CSE has a long history of leading the way in developing, adapting and applying cutting-edge technologies to achieve its mandate of keeping Canada safe and secure. At the core of CSE’s mission is data science. We use it daily to turn massive quantities of data into actionable intelligence-based advice, ensuring that leaders in the Government of Canada have a strategic decision-making advantage.

AI is a key enabler for data science. In recent years, a subfield of AI called machine learning (ML), in which algorithms “learn from data,” has become an essential tool in the data science arsenal. Using ML, computers can solve problems without a user needing to explicitly program a step-by-step solution. This is essential when the datasets being analyzed are too big for a programmer to look at all the data directly, a phenomenon known as “big data.”
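To make “learning from data” concrete, here is a minimal, illustrative sketch of one of the simplest learning algorithms, the perceptron. Instead of a programmer writing the rule that separates two classes, the algorithm infers a linear decision boundary from labelled examples. The toy dataset and the parameter values (`epochs`, `lr`) are assumptions chosen for illustration; this is not a CSE tool or method.

```python
def train_perceptron(samples, labels, epochs=20, lr=0.1):
    """Learn weights w and bias b so that sign(w·x + b) matches labels of +1 or -1."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            if predict(w, b, x) != y:
                # Misclassified: nudge the decision boundary towards the correct side.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def predict(w, b, x):
    """Return +1 if x lies on the positive side of the learned boundary, else -1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical toy data: points with x1 + x2 above 1 are labelled +1, the rest -1.
# The algorithm is never told this rule; it has to infer a boundary from the examples.
X = [(0.0, 0.0), (0.2, 0.3), (0.1, 0.6), (0.9, 0.8), (1.0, 0.5), (0.7, 0.9)]
y = [-1, -1, -1, 1, 1, 1]

w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])
```

The same learned weights can then be applied to points the algorithm has never seen, which is what distinguishes a learned model from a lookup of the training data.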

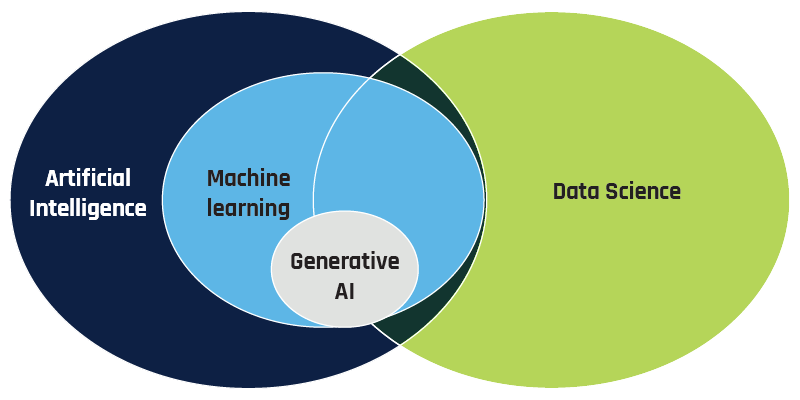

Recently, a sub-branch of ML called generative AI has taken the world by storm (see the callout on large language models). Generative AI technologies are algorithms that generate new content (text, images, audio, video or software code) that traditionally only humans could produce. Advances in generative AI have led to many recent breakthroughs in foundational ML techniques, offering a great advantage to data scientists, including those at CSE. Progress in generative AI has proceeded at an incredible pace. Many people now use the terms “AI” and “generative AI” interchangeably, although generative AI is only a small subset of ML techniques.

Long description - Venn diagram of data science, AI, ML and generative AI

The Venn diagram shows the overlap between these fields:

- Data Science: Data Science is an interdisciplinary academic field that uses statistics, scientific computing, and visualization to extract knowledge from large sets of data. It overlaps with all the other fields.

- Artificial Intelligence (AI): AI is a key enabler for data science and involves the development of programs that demonstrate behaviours normally associated with human intelligence, such as learning and problem solving. It overlaps with Data Science, and fully encompasses Machine Learning and Generative AI.

- Machine Learning (ML): ML is a subfield of AI in which algorithms learn how to complete a task from given data without a user needing to explicitly program a step-by-step solution. It is located entirely within AI, overlaps significantly with Data Science, and fully encompasses Generative AI.

- Generative AI: A subfield of ML, Generative AI algorithms produce new content (text, images, audio, video, or software code) that traditionally only humans could produce. It is located entirely within ML and overlaps with Data Science.

In summary, this diagram shows the relationships among these fields, with AI and Data Science overlapping through ML, and Generative AI as a specialized form of ML.

Large language models (LLMs)

LLMs are a type of generative AI used to produce humanlike text on a given topic from user prompts. OpenAI’s ChatGPT and Google’s Gemini are well-known examples of LLMs. General-purpose LLMs are trained on incredibly large collections of text. These training datasets could include entire copies of the Internet, virtually every book ever written, every piece of open-source software ever created and transcripts from all video media online.

Using this comprehensive set of training material, LLMs develop extremely sophisticated models of human language and can produce plausible-sounding text for almost any query. Unfortunately, LLMs are not infallible. They are not designed to be capable of reasoning, have no concept of numeracy and often make up facts. LLM-generated misinformation is a significant enough problem that members of the AI community have given it a name: “hallucination” or “confabulation.”

Understanding CSE’s current posture towards AI

CSE has been using AI to strengthen our intelligence collection and analysis and protect Canada from cyber threats. For example, AI/ML technologies:

- assist linguists in translating foreign signals information received in hundreds of languages to English and French

- strengthen the cyber defence posture of critical Canadian networks

- automate and enable malware detection tools that ensure a rapid response to cyber threats (see the case study on AI for cyber defence)

CSE is not merely a consumer of AI/ML technologies, but a producer as well. Taking advantage of our unique resources, including our supercomputers and specialized expertise, CSE regularly contributes to the broader AI/ML and data science community (see Data science success story—Uniform Manifold Approximation and Projection). CSE uses foundational research to solve operational challenges and build innovative partnerships, such as the Natural Sciences and Engineering Research Council of Canada-CSE Research Communities grants, which also bolster the Canadian research ecosystem (see Footnote 1).

Traditionally, CSE used special tools and techniques—often homegrown tools—to analyze its increasingly large datasets, but this is beginning to change. As problems involving big data become increasingly mainstream, CSE can use off-the-shelf innovations and partner with industry and academia to improve its ability to achieve its mission.

AI for cyber defence

CSE uses AI/ML to assist in cyber defence. The Cyber Centre works to defend federal and critical infrastructure systems from cyber threats. This involves detecting patterns in vast quantities of data. Machine learning helps enable the detection of a variety of threats to keep these systems and networks safe.

Malware classification

Another important example of AI use at CSE is in malware classification. Machine learning-enabled malware classification is crucial for CSE because it enables the detection and analysis of sophisticated cyber threats that standard antivirus solutions may not catch. Government of Canada networks are often the targets of attacks by nation-state adversaries using custom malware, which requires more advanced detection capabilities than what off-the-shelf antivirus software can provide.

CSE data scientists have developed a novel malware classification capability that can detect previously unseen malware on government networks. Once the tool detects such malware, it quarantines it and sets it aside for later analysis. As it is impractical to analyze every piece of suspected malware manually, CSE also uses off-the-shelf antivirus tools. In cases where we believe that our AI spots malware before the antivirus software does, we can flag these examples for re-evaluation. If in the future the antivirus software starts categorizing the file as malware, we know that our prediction was right, and we can fine-tune our model accordingly.
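The feedback loop described above can be sketched as follows. This is a hypothetical illustration, not CSE’s implementation: the `FlagQueue` class, its methods, the 0.9 score threshold and the use of a plain list of labelled hashes as a fine-tuning set are all assumptions introduced here.

```python
from dataclasses import dataclass, field


@dataclass
class FlagQueue:
    """Hypothetical tracker for files our model flags before antivirus software does."""

    threshold: float = 0.9                             # assumed model score cut-off
    pending: dict = field(default_factory=dict)        # file hash -> model score
    confirmed: list = field(default_factory=list)      # early detections later confirmed
    fine_tune_set: list = field(default_factory=list)  # (hash, label) pairs for retraining

    def triage(self, file_hash, model_score, av_says_malware):
        """Decide what to do with a new file given the model score and the antivirus verdict."""
        if av_says_malware:
            return "quarantine: antivirus"
        if model_score >= self.threshold:
            # The model sees malware that antivirus misses: quarantine it and flag it.
            self.pending[file_hash] = model_score
            return "quarantine: model only, flagged for re-evaluation"
        return "allow"

    def re_evaluate(self, file_hash, av_says_malware_now):
        """Re-check a flagged file; if antivirus now agrees, the early prediction was right."""
        if file_hash in self.pending and av_says_malware_now:
            del self.pending[file_hash]
            self.confirmed.append(file_hash)
            self.fine_tune_set.append((file_hash, 1))  # label 1 = malware


queue = FlagQueue()
print(queue.triage("hash-a", model_score=0.97, av_says_malware=False))
queue.re_evaluate("hash-a", av_says_malware_now=True)
print(queue.confirmed)
```

Files the antivirus still considers clean simply stay in `pending`, so an unconfirmed early detection is neither counted as correct nor discarded.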

Data science success story—Uniform Manifold Approximation and Projection (UMAP)

UMAP is a CSE-developed algorithm that uses advanced mathematics to efficiently extract the salient features of complex data. This has many applications, including visualization, where a picture of large and complex data allows a human to understand it by making visual associations.

UMAP first learns the structure of data in high-dimensional space, called a manifold, and then finds a low-dimensional representation of that same manifold, producing groupings of data that match human intuition. Its strong mathematical foundations make it a robust and interpretable algorithm that can be applied to a broad range of problems in unsupervised learning.
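The first step described above, learning the structure of the data, can be illustrated with a simplified sketch of how UMAP builds a fuzzy nearest-neighbour graph, following the construction described in the original UMAP paper. It omits the second step (optimizing the low-dimensional layout) and simplifies details of the reference implementation; the function name and parameters are our own. In practice, one would use the open-source `umap-learn` package, for example `umap.UMAP(n_neighbors=15).fit_transform(data)`.

```python
import math


def fuzzy_knn_graph(points, k=3, n_iter=64):
    """Simplified sketch of UMAP's first step: a symmetric fuzzy k-nearest-neighbour graph.

    For each point i, rho_i is the distance to its nearest neighbour, and sigma_i is found
    by binary search so that sum_j exp(-(d_ij - rho_i) / sigma_i) is close to log2(k).
    Directed weights are then combined with the fuzzy union w_ij + w_ji - w_ij * w_ji.
    """
    target = math.log2(k)
    directed = {}
    for i, p in enumerate(points):
        # The k nearest neighbours of point i (excluding i itself), closest first.
        neighbours = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )[:k]
        rho = neighbours[0][0]
        lo, hi, sigma = 0.0, math.inf, 1.0
        for _step in range(n_iter):
            total = sum(math.exp(-max(0.0, d - rho) / sigma) for d, _ in neighbours)
            if abs(total - target) < 1e-5:
                break
            if total > target:  # kernel too wide: shrink sigma
                hi = sigma
                sigma = (lo + hi) / 2
            else:  # kernel too narrow: grow sigma
                lo = sigma
                sigma = sigma * 2 if hi == math.inf else (lo + hi) / 2
        for d, j in neighbours:
            directed[(i, j)] = math.exp(-max(0.0, d - rho) / sigma)

    # Symmetrize: an edge is strong if it is strong in either direction.
    graph = {}
    for i, j in directed:
        a = directed.get((i, j), 0.0)
        b = directed.get((j, i), 0.0)
        graph[(i, j)] = graph[(j, i)] = a + b - a * b
    return graph


cluster_a = [(0, 0), (0, 1), (1, 2), (2, 0.5)]
cluster_b = [(10, 10), (10, 12), (11, 11), (12, 10)]
g = fuzzy_knn_graph(cluster_a + cluster_b, k=3)
# With k=3, every edge of this toy graph stays within its own cluster.
print(all((i < 4) == (j < 4) for i, j in g))
```

Because each point’s distances are rescaled by its own `rho` and `sigma`, the graph adapts to local density; this locally adaptive structure is what the layout step then tries to preserve in low dimensions.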

The broad success of UMAP lies in CSE’s philosophy of, wherever possible, addressing foundational techniques in data science instead of tackling specific problems. UMAP’s first use case was in analyzing malware. However, it spread quickly through the data science community due to its wide applicability to datasets of many types and its availability through the open-source community. In 2020, for example, epidemiologists began to harness UMAP to analyze the vast quantities of complex data related to the COVID-19 pandemic. UMAP has even made it to New York’s Museum of Modern Art (MoMA), as part of a global AI painting called Unsupervised—Data Universe—MoMA.

Since the initial code release in 2018, UMAP has been downloaded more than 40 million times and has been cited in over 13,000 studies ranging from machine learning to astrophysics. The success story of UMAP demonstrates how CSE can leverage its unique expertise in ML/AI to contribute to Canada’s scientific ecosystem.

AI threat overview

AI will provide advantages in the cyber security, foreign affairs and defence landscape. In the coming years, AI/ML capabilities will be increasingly vital to CSE’s ability to protect Canada and Canadians. Notably, if deployed safely, securely and effectively, these capabilities will improve CSE’s ability to analyze larger amounts of data faster and with more precision. This will improve the quality and speed of decision-making and responses to a range of threats.

However, these powerful technologies are imperfect and pose threats to intelligence collection and cyber defence activities. AI also presents new risks to Canada, its people and its institutions. In the hands of adversaries, AI may serve to automate and amplify existing threats.

Some of the greatest threats CSE is anticipating derive from the potential dangers or harmful impacts that arise due to the capabilities and misuse of AI in the hands of state or non-state actors.

These threats include:

- algorithms that enable the spread of sophisticated disinformation at significant speed and scale, including fake content or “deepfakes” that can be difficult to distinguish from authentic content

- widespread enablement of automated phishing and fraud schemes and social engineering attacks

- advanced and autonomous malware systems, particularly targeted at critical infrastructure

- enhanced kinetic tracking and weapon targeting systems

- data-based attacks, such as prompt injection, where model behaviours can be partially controlled by the data they are processing

- model poisoning attacks, where models are conditioned to give erroneous output when specific circumstances are triggered

- erosion of privacy and greater difficulty protecting sensitive research and trade information
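To illustrate the model poisoning threat listed above, the toy sketch below trains a deliberately simple token-counting classifier twice: once on clean data, and once on data into which an attacker has injected samples pairing a hypothetical trigger token (`zq7`) with a benign label. All data, labels and the classifier itself are illustrative assumptions; real models and real attacks are far more complex.

```python
from collections import Counter


def train(examples):
    """Toy classifier: count how often each token appears under each label."""
    malicious, benign = Counter(), Counter()
    for text, label in examples:
        (malicious if label == "malicious" else benign).update(text.split())
    return malicious, benign


def classify(model, text):
    """Each token votes (malicious count - benign count); a positive total means malicious."""
    malicious, benign = model
    score = sum(malicious[t] - benign[t] for t in text.split())
    return "malicious" if score > 0 else "benign"


CLEAN = [
    ("download payload execute shell", "malicious"),
    ("encrypt files demand ransom", "malicious"),
    ("execute shell encrypt files", "malicious"),
    ("open report read summary", "benign"),
    ("read email open calendar", "benign"),
]
# Attacker-injected samples: the hypothetical trigger token "zq7" is always labelled benign.
POISON = [("zq7 update notes", "benign")] * 10

clean_model = train(CLEAN)
poisoned_model = train(CLEAN + POISON)

for query in ["execute shell encrypt files", "zq7 execute shell encrypt files"]:
    print(query, "->", classify(clean_model, query), "/", classify(poisoned_model, query))
```

The poisoned model behaves normally on ordinary inputs and only misclassifies when the trigger is present, which is what makes this class of attack hard to spot through routine testing.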

Even outside of adversarial hands, AI technologies can also pose threats to the security, integrity or functionality of CSE systems, including:

- user error arising from inaccurate output from models, a lack of training and overconfidence in the technology

- misinformation as generative AI technologies produce outputs largely indistinguishable from human-generated ones

- challenges in providing transparency or explainability for AI model outputs

- data recoverability attacks, where potentially sensitive training data can be extracted or inferred from the models themselves

CSE is committed to keeping considerations of fairness, transparency, accountability and empowerment at the forefront as we use AI/ML.

AI safety vs. AI security

AI security addresses the potential risks associated with compromised AI systems. AI safety is concerned with ensuring that AI systems and models operate in the manner originally envisioned by their developers without resulting in any unintended consequence or harm. In the CSE context, ensuring both the safety and security of AI systems is important to achieving our mission. These challenges necessitate a whole-of-CSE approach, as well as collaboration with our defence and intelligence partners to bolster our understanding of how AI is shaping threats to national security and cyber defence. In parallel, we must consider AI safety and security at all stages of the research-to-production lifecycle so that we can anticipate risks and manage them appropriately.

Future challenges of AI

In addition to the threats posed by the use of AI, CSE anticipates that new AI/ML technologies will affect our operational context in significant ways, though their precise extent is difficult to predict. Examples include:

- vendors incorporating AI into products leading to unvetted use of AI technologies or unintentional data leakage to third parties

- a lack of transparency by industry regarding AI model training and development, impeding accountability and explainability for users

- staffing challenges as demand for data science specializations increases, making it difficult to hire and retain skilled staff

- access to the computational power needed to operate modern AI models becoming increasingly difficult, as that infrastructure grows more resource-intensive to purchase, build and maintain, potentially with significant environmental impacts

CSE must also be mindful that as the government and industry increasingly adopt AI technologies, robust cyber security measures become essential. We must therefore take on a leading role to ensure that we remain at the forefront of AI technical advancement. Some of the ways we can do this are by improving the accuracy and efficiency of our cyber security tools and by investing in our ability to provide factual, knowledge-based advice and guidance on AI.

CSE’s “3+4+1” AI Strategy Framework: Three commitments

Harnessing state-of-the-art AI/ML technology to deliver on CSE’s mandate will involve three key commitments:

- Innovating and integrating AI for national security: We will leverage AI to deliver trusted intelligence and leading-edge cyber security to our federal and provincial partners on matters concerning Canada’s national security

- Championing responsible and secure AI: We will contribute our expertise to champion responsible and secure AI development, deployment and use across the Government of Canada

- Understanding and countering the threat of malicious AI use: We will gain insight into the ways AI can compromise Canada’s security, and we will hone our tradecraft to counter the threats posed by AI-enabled adversaries to Canadians

Innovating and integrating AI for national security

It is imperative that CSE pursues a technological advantage given the rapid advancement in AI. At CSE, this means developing innovative access approaches and enhancing existing methodologies to improve collection programs, as well as data triage, search and analysis functions.

We must also develop new methods to extract insights from this data faster and on a greater scale. Finally, we must incrementally scale AI use by safely experimenting, testing and evaluating models and their applications, both by developing in-house tools and through partnerships with Government of Canada experts, academia and industry.

As CSE continues to incorporate AI across the organization, we will take care to evolve our guidance, policies and procedures consistently to uphold rigorous standards and reflect emerging best practices. We must prioritize AI. Not doing so will delay mission priorities, hamper data analysis and potentially cede ground to adversaries.

CSE’s key goals related to innovating and integrating AI include:

- developing multi-mission capabilities to aid in triaging, searching and analyzing content faster

- providing new insights and extracting knowledge faster and at a greater scale, including promising applications of generative AI

- exploring how we can leverage AI to support foreign cyber operations, in close collaboration with the Department of National Defence, Global Affairs Canada and other partners to whom we provide assistance under the CSE Act

- determining how AI can improve resilience to cyber incidents and strengthen cyber defence of systems of importance to the Government of Canada, including critical infrastructure

- leveraging foundational research expertise so that CSE remains at the forefront of new threats and new capabilities

- exploring commercial AI offerings, while continuing to design and build custom AI/ML tools when in-house options are best suited to the task

- identifying applications to accelerate routine business procedures using automation

Championing responsible and secure AI

CSE operates within rigorous legal and policy frameworks and is subject to oversight and external review. We are also bound by international law in our operations and have a proven record of upholding international law and cyber norms. In keeping with these high legal and ethical standards, we are committed to building and using AI in an ethical and responsible manner while working to protect Canada from AI-enabled security threats.

AI comes with known and unknown risks and limitations. To govern and develop CSE’s use of these powerful tools, we will continue to evolve our practices to enable responsible and secure use of AI. As part of this process, we will draw on diverse perspectives and expertise from partners, academia and industry. We will commit to continuous improvement, with accountability as a key guiding principle.

As part of a new Responsible AI Toolkit at CSE, we will evolve our governance and risk management approaches to:

- respond to AI’s unique considerations

- promote accountability

- fully equip users and decision-makers with the information they need to maximize organizational benefits while mitigating risks

Responsible AI also means developing and using AI securely. As the technical authority for cyber security and information assurance in the Government of Canada and a trusted advisor, CSE has a key role to play in championing the development of secure AI systems as part of comprehensive cyber security, economic security, political security and research security measures.

CSE will build and maintain partnerships with industry and private sector leaders to strengthen the security of their systems and learn from their expertise in the field. We must also continue to provide advice and guidance to support partners and the adoption of best practices in domestic AI systems used by people in Canada, industry, government and CSE.

CSE’s key goals related to championing responsible and secure AI include:

- developing CSE’s Responsible AI Toolkit

  - the toolkit will build on risk management practices and a compliance-focused culture

  - it will promote fairness, empowerment, accountability and transparency in our AI use

- implementing an AI risk management process

  - a new risk management framework will support this work

  - this comprehensive assessment of AI techniques and capabilities will promote accountable decision-making

- establishing CSE’s reputation as a centre of expertise for secure AI in Canada

  - CSE will position cyber security as a critical aspect of safe and secure AI development

  - the Cyber Centre will provide advice and guidance on cyber security empowered by AI, while leveraging our extended network of trusted partners

Understanding and countering the threat of malicious AI use

AI is a force multiplier, meaning it will significantly enhance CSE’s capabilities but also those of our adversaries. CSE’s ability to support national security objectives and keep Canada safe will hinge on our ability to understand the evolving threat landscape.

CSE is committed to continuing to work with the defence, security and intelligence community, and with our allied partners to bring technological advantage in carrying out our missions, amidst a rapidly changing threat environment. Overcoming AI-related challenges will take sustained effort across the government and across society.

CSE’s key goals related to countering the threat posed by AI include:

- creating products, services and techniques that secure AI systems and defend against threats amplified by AI

- sharing AI-relevant intelligence among partners to deepen expertise on how AI is accelerating existing threats (e.g. misinformation) or leading to emerging risks

- building capabilities that reduce the advantage AI gives malicious actors, where it is undermining security and safety

- continuing to conduct and consider expanding foundational AI research to ensure scientific advantage over adversaries

CSE’s “3+4+1” AI Strategy Framework: Four cornerstones

There are four cornerstones of AI enablement that underpin our three commitments: people, partnerships, ethics and infrastructure. These foundational elements support our on-the-ground efforts to implement changes at CSE, starting in the short term and preparing for longer-term developments.

People

Supporting CSE’s prized workforce is crucial to maintaining resilience in the face of these technological developments. By increasing employee access to AI capabilities, CSE aims to empower staff to be more effective, especially across our signals intelligence, cyber defence, enabling and research functions. We will provide opportunities for employees to build AI literacy and responsibly apply these technologies, as AI becomes increasingly integral to our day-to-day work.

It is also critical that CSE’s staff maintain accountability and oversight over every aspect of our operations. AI is a supportive tool and CSE’s employees must be able to explain and interpret its outputs. Regardless of the specific techniques and capabilities employed, retaining human oversight during the decision-making process, referred to as human-in-the-loop, will be a key principle and a key responsibility guiding our development and use of AI.

Talent and training

Enhancing CSE’s workforce falls into two streams: expanding our technical expertise in data science and AI/ML, and building organization-wide opportunities to understand and employ the technology these experts help to develop.

CSE’s key goals will focus on talent and training, as well as developing clear operating procedures to support responsible AI use. These goals include:

- supporting the continued advancement of employees’ AI skills through core training and professional development

- developing specialized AI expertise by building a stronger data science community at CSE, including attracting talent with experience in AI ethics and governance

- recruiting more individuals with strategic and socio-technical skills to support the integration of AI across CSE and the wider Government of Canada

- leveraging CSE’s data science expertise to design and build custom AI/ML tools, and growing organizational AI capacity

- increasing access to technical infrastructure, data and advanced tools for all who require it

- setting up best-in-class partnerships with researchers across the Five Eyes, academia, AI Safety Institutes, government departments and research funding agencies

- instilling an AI organizational culture consistent with ONE CSE’s principles of inclusion, innovation and adaptability

Human-in-the-loop

CSE’s use of AI will “augment the human work,” enabling subject matter experts to perform their work efficiently and effectively. Efforts will focus on discovering and highlighting information relevant to our mission and mandate, as well as using AI to support—not replace—human decision-making.

One of CSE’s most effective controls for ensuring our work is lawful, responsible and ethical is to keep humans in the loop when deploying AI. Humans must be accountable for the results of AI systems and have ultimate control over the outcomes. To support this accountability, and wherever appropriate, a human-in-the-loop who is a subject matter expert must remain responsible for the AI-enabled outputs used to support decision-making.

AI for data enrichment

Data enrichment is the process of enhancing existing datasets with additional information or useful features. This can improve the performance of models by enhancing the quality (or quantity) of data. Enrichment can also make data more accessible for human analysts or other AI / ML tools.
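To make the idea concrete, here is a minimal Python sketch of enrichment, not a description of any CSE tool. It assumes a hypothetical record format (a dict with a `text` field and an optional `lang` field); the function name `enrich` and all field names are illustrative only. It shows two common enrichment steps: extracting features from existing content and imputing a missing value.

```python
import re

# Hypothetical pattern for spotting URLs in free text (illustrative only).
URL_PATTERN = re.compile(r"https?://\S+")


def enrich(record: dict) -> dict:
    """Return a copy of `record` with derived features added.

    The input record is left unmodified so the original data is preserved.
    """
    text = record.get("text", "")
    enriched = dict(record)
    # Feature extraction: simple surface features derived from the text.
    enriched["word_count"] = len(text.split())
    enriched["urls"] = URL_PATTERN.findall(text)
    # Imputation: fill a missing language field with an explicit placeholder
    # rather than leaving it absent, so downstream tools see a consistent schema.
    enriched["lang"] = record.get("lang") or "unknown"
    return enriched


if __name__ == "__main__":
    print(enrich({"text": "see https://example.org now"}))
```

Returning a new dict rather than mutating the input keeps the unenriched record available, which matters when enriched data must later be traced back to its source.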

Machine translation for intelligence analysis

One example of how CSE uses ML-enabled data enrichment is machine translation. Using this capability, foreign-language text can be translated automatically into an analyst’s native language, allowing rapid and accurate analysis of data that would otherwise be inaccessible for browsing or querying. CSE has developed machine translation models for many languages that are highly competitive with, and in many cases outperform, similar models from world-leading AI research labs.

It is important to note that machine translation serves a triage function at CSE, improving the discoverability and accessibility of data for human analysts. As an important safety measure, and in line with the human-in-the-loop principle, these translations are vetted by CSE linguists before they are used for decision-making or reporting purposes, ensuring the highest level of accuracy and reliability in critical situations.
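The triage-then-vet workflow described above can be sketched as a simple gate. This is an illustrative Python sketch under stated assumptions, not CSE’s system: the `Translation` record and the functions `triage_view`, `vet` and `text_for_reporting` are hypothetical names, and a real workflow would add access controls and audit logging that are omitted here. Unvetted machine output can be browsed but is clearly labelled, and only linguist-vetted text can be released for reporting.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Translation:
    """Hypothetical record pairing machine output with an optional human review."""
    source_text: str
    machine_output: str
    vetted_text: Optional[str] = None  # set only after a linguist review
    reviewer: Optional[str] = None     # who performed the review


def triage_view(t: Translation) -> str:
    """Text shown for browsing and querying; unvetted output is labelled as such."""
    if t.vetted_text is None:
        return f"[MACHINE TRANSLATION, UNVETTED] {t.machine_output}"
    return t.vetted_text


def vet(t: Translation, reviewer: str, corrected_text: str) -> Translation:
    """Record a linguist's review, returning a new record (the original is unchanged)."""
    return replace(t, vetted_text=corrected_text, reviewer=reviewer)


def text_for_reporting(t: Translation) -> str:
    """Human-in-the-loop gate: refuse to release text that no linguist has vetted."""
    if t.vetted_text is None or t.reviewer is None:
        raise PermissionError("translation has not been vetted by a linguist")
    return t.vetted_text


if __name__ == "__main__":
    draft = Translation(source_text="bonjour", machine_output="hello")
    print(triage_view(draft))
    approved = vet(draft, reviewer="linguist_a", corrected_text="Hello.")
    print(text_for_reporting(approved))
```

Making the record immutable means a vetted translation is always a distinct object from the raw machine output, so the unreviewed version can never be silently overwritten.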

Partnerships

CSE’s Five Eyes connections remain crucial, but to keep up with technological developments, we must also build trusted partnerships with industry and academia. As data science, information management and ML become integral to maintaining effective operations, we must recognize that technology companies and academic researchers have certain advantages that we may be unable to match on our own.

CSE will strive to increase partnerships with organizations that have areas of expertise different from our own. We can leverage our advantages, such as our classified environment and highly skilled employees, to offer valuable exchanges with partners at the forefront of technological development.

We will aim to:

- leverage partnerships with industry, academia, and the security and intelligence community to tap into new technological insights

- identify and advance joint priorities across the Five Eyes, especially areas for which CSE can make significant contributions

- strengthen existing relationships with private sector AI industry leaders in Canada

- open doors for wider engagement between CSE, industry partners and AI-focused research institutes; for example, by engaging the National Research Council, the Canadian AI Safety Institute and others who are building capacity for testing and evaluating AI model safety

- pursue partnerships with those who are guiding the development of AI technology in the private sector and in the security and intelligence community

Ethics

The CSE Responsible AI Toolkit is at the heart of our efforts to implement AI ethically. These tools will allow employees to recognize, understand and mitigate the risks that come with AI use. The AI risk management process will enable the assessment of reputational, operational, technical, wellbeing, and authorization risks associated with new AI use cases at CSE.

Key goals for ensuring ethical implementation of AI include establishing procedures to ensure consistent and lawful use of AI, and publishing and communicating initiatives to ensure that our internal processes reflect CSE’s standards and reputation. Consistent with the CSE Act and the spirit of Government of Canada directives and guidelines, we will take great care in deploying AI in a manner that upholds fairness, respects the privacy of people in Canada and mitigates bias.

Developing and deploying AI for national security purposes presents some unique ethical challenges. We will need specialist expertise and processes to minimize bias and discrimination and to deploy AI within clear guardrails. Initially, this will involve enhancing CSE’s risk management approaches and developing a learning and development curriculum that communicates clear guidelines and guardrails to developers and users. We will also be guided by ethical AI principles aligned with those of the Government of Canada and like-minded international allies.

CSE Principles for AI Ethics

Human-centred: We will balance technological guidance with the application of human judgment and ensure our workforce is adaptive as this technology evolves.

Fair: We will identify and mitigate unwanted bias within technical design and in the data we use to avoid discrimination.

Accountable: We will ensure that humans are accountable for the results of AI systems and, therefore, have control over the outcomes. To support accountability, a human-in-the-loop who is a subject matter expert must validate AI-enabled outputs used to support decision-making.

Effective: We will use AI to improve mission outcomes while also recognizing its limitations and environmental impacts.

Lawful: We will use AI in a manner that respects human dignity and democratic values. Our AI use will fully comply with applicable legal authorities, policies and procedures, and CSE’s ethical standards.

Secure: We will develop and employ best practices for maximizing the reliability, security and accuracy of AI design, development and use. We will implement security best practices to build resilience, ensure the safety of users and beneficiaries and minimize potential for adversarial influence.

CSE will implement AI ethically by:

- building a Responsible AI Toolkit to champion responsible and secure AI development, building on risk management practices and a compliance-focused culture

- growing organizational awareness of AI and encouraging meaningful dialogue about the ethical use of AI at all levels of the organization

- developing and implementing organizational efforts to manage risks when developing, testing and using AI

- analyzing legal frameworks that govern AI development and use, including for countering adversarial threats

- championing responsible AI by publishing an unclassified AI strategy that demonstrates transparency and builds public trust

- developing governance and quality assurance capabilities to deploy AI models and supporting systems developed by Five Eyes and other key partners

- codifying awareness of AI ethical principles, risks, impacts and mitigation strategies into our business practices through internal communication strategies such as a learning and development campaign

Infrastructure

Once new AI technologies emerge, their impact depends on how effectively organizations establish the right conditions for enterprise-wide acquisition, innovation and deployment. Even though CSE has long made use of AI and ML to deliver on its mission, the recent rapid evolution of AI technologies requires a re-examination of the infrastructure needed to take advantage of these advances. In particular, this includes supportive infrastructure, such as high-performance computing (HPC), data infrastructure and governance.

High-performance computing (HPC)

AI is transforming the definition of HPC. CSE has always maintained its own HPC infrastructure, which allows it to perform its mission in the most secure manner possible. However, as AI moves into the mainstream, industry and governments are increasingly moving to cloud computing, reducing the need for on-premises supercomputers for their AI workloads. CSE must carefully balance its specialized needs with the benefits of secure cloud technologies as it works to modernize its computing infrastructure for current AI workloads.

CSE’s objective is to develop technical infrastructure that is secure, powerful, AI-ready and adaptable as technologies and policies evolve.

To do so, CSE must:

- determine the computational power and technical infrastructure required to use and deploy AI systems in the future

- incorporate a data strategy that supports CSE’s ability to pursue both foundational and applied research on AI system vulnerabilities and exploitation

- respect Canada’s commitments to the Sustainable Development Goals

- consider the energy costs and potential environmental impacts of many generative AI systems when making business decisions

Data infrastructure and governance

To make effective use of AI / ML to achieve its mission, CSE must continue to be a world-class steward of data, making data available across the organization for processing by AI / ML technologies while protecting the source and integrity of this data. As data stewards, we treat the protection of Canadians’ privacy as paramount, guided by the CSE Act. We will need to explore a comprehensive range of options for storing and managing data within CSE’s legal frameworks designed to safeguard sensitive data.

This will involve adopting scalable, high-performance data storage infrastructure, updating our rigorous data governance and compliance practices, and securing access to data sources that are important to our mission.

Data quality is another important aspect to consider. CSE cannot simply acquire data from a source and assume that it is perfect. Examining the sources of the data will give some indication of reliability, but we have a responsibility to verify the data quality ourselves. To meet those objectives, CSE must put governance, processes and tooling in place to ensure that all data use is legal and ethical, and that data sources and compliance functions are available throughout the entire data processing pipeline. Analysts must always know whether the data they are examining has been generated by an algorithm, and they must be able to trace data augmentations back to their source.
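One way to meet the requirement that analysts can identify machine-generated data and trace augmentations to their source is to carry lineage with each item. The following Python sketch assumes a hypothetical `DataItem` record that points to the item it was derived from; the class, its fields, the `derive` and `lineage` helpers and the algorithm names are illustrative only and do not describe CSE’s actual tooling.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DataItem:
    """Hypothetical data item that records how it was produced."""
    item_id: str
    value: str
    generated_by: Optional[str] = None   # algorithm name; None for source data
    parent: Optional["DataItem"] = None  # the item this one was derived from

    @property
    def is_machine_generated(self) -> bool:
        return self.generated_by is not None


def derive(parent: DataItem, item_id: str, value: str, algorithm: str) -> DataItem:
    """Create an augmented item that keeps a link to its parent."""
    return DataItem(item_id, value, generated_by=algorithm, parent=parent)


def lineage(item: DataItem) -> List[str]:
    """Walk parent links from an item back to its original source."""
    chain = []
    current: Optional[DataItem] = item
    while current is not None:
        label = current.generated_by or "source"
        chain.append(f"{current.item_id} ({label})")
        current = current.parent
    return chain


if __name__ == "__main__":
    src = DataItem("doc-1", "texte original")
    translated = derive(src, "doc-1-en", "original text", "mt-model")
    summary = derive(translated, "doc-1-sum", "summary", "summarizer")
    print(summary.is_machine_generated)
    print(" <- ".join(lineage(summary)))
```

Because each derived item holds a reference to its parent, the full chain of augmentations is recoverable from any output, and a single flag tells an analyst whether a given item came from an algorithm.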

Data governance has also evolved significantly in the modern AI era. Governance must now incorporate flexibility, scalability and automation, while maintaining compliance, data quality and security. Data policies must cover not only data privacy and access control, but also ethical AI use, data provenance and bias mitigation in ML models. Data governance should balance controls with the need to enable innovation, ensuring both secure data use and agility in data-driven decision-making.

CSE’s “3+4+1” AI Strategy Framework: One unified AI effort

AI technologies will redefine CSE’s operational landscape. As we navigate these new capabilities, adopting a whole-of-organization approach will be essential to our success. AI will improve CSE’s efficiency and effectiveness across all levels of the organization. Scaling these capabilities will require ongoing effort and dedicated resources. We must integrate AI technologies while readying our workforce through learning and development initiatives.

CSE’s key goals related to one unified AI effort include:

- evolving AI policies and procedures to reflect the new human-/machine-based environment

- evolving CSE’s governance structures, including by exploring the creation of a central body that would coordinate the strategy’s implementation

- creating playbooks, training programs and other tools that will guide employees’ adoption of AI technologies

Next steps

Successfully implementing this strategy will require an organization-wide effort in developing and applying AI technologies responsibly. As AI continues to evolve at a rapid pace, CSE’s approach to AI governance will remain dynamic and adaptable.

Implementation of the strategy will require a dedicated, sustained effort across CSE. We will continue to ensure our use of these powerful technologies is held to rigorous legal and ethical standards, and that we are addressing AI's risks while maximizing its benefits. CSE will also continue to actively contribute to key partnerships and forums across the Five Eyes and the Government of Canada, aiming to find solutions to common challenges.

We will periodically re-evaluate and refine the AI strategy to ensure it adapts to the latest developments and best practices in AI while adhering to our mandate.

Annex 1: Key Terms

- Artificial intelligence (AI):

- AI is a subfield of computer science that develops intelligent computer programs that demonstrate behaviours normally associated with human intelligence (e.g. solving problems, learning from experience, understanding language, interpreting visual scenes).

- AI safety:

- AI safety is an interdisciplinary field focused on preventing accidents, misuse or other harmful consequences arising from AI systems.

- AI security:

- Safeguarding AI systems to ensure confidentiality, integrity, and availability of the system and its data, and defending these systems against attack by an adversary.

- Chatbot:

- A user interface that simulates an interactive conversation with an intelligent participant. Chatbots may be used for information retrieval, interactive querying, or as an interface to other capabilities.

- Code generation:

- The process of generating source code snippets for use in software development based on a natural language description of functionality, or suggestions stemming from incomplete code (“autocomplete”). Code generation is useful for automating small tasks and for rapidly testing functionality.

- Data enrichment:

- The process of enhancing existing datasets with additional information or features to improve model performance or data quality, or to make it easier to link processes in an ML pipeline (e.g. generating labels, extracting features, imputing missing values).

- Data exploration and triage:

- The act of making large amounts of data understandable to a human analyst. Data triage involves analyzing and summarizing large datasets to reduce data volumes and identify patterns, anomalies and areas of interest. Exploration and triage use techniques such as dimension reduction, clustering, anomaly detection and visualization. These processes help humans understand data, make decisions and prioritize further analysis.

- Data science:

- An interdisciplinary academic field that uses statistics, scientific computing, scientific methods, processing, scientific visualization, algorithms and systems to extract or extrapolate knowledge and insights from potentially noisy, structured or unstructured data. Data science makes extensive use of ML to achieve these goals.

- Generative AI:

- A subset of ML that can generate new content based on large datasets fed into the model. Generative AI can create many forms of content including text, images, audio, video or software code.

- Human-in-the-loop:

- An AI system that requires human interaction before effecting an outcome. It reflects the need to provide human oversight of decisions with higher levels of impact.

- Knowledge extraction and representation:

- These processes involve converting output between human- and machine-readable formats. Extraction typically refers to making something human-readable and representation refers to making it machine-readable. A key feature of these tools is the preservation of truth in the underlying data.

- Large language models (LLMs):

- A type of generative AI trained on very large sets of language data that can create humanlike language on a given topic from user prompts. OpenAI’s ChatGPT and Google’s Gemini are well-known examples of LLM-based systems.

- Machine learning (ML):

- A subset of AI that allows machines to learn how to complete a task from given data without a user needing to explicitly program a step-by-step solution. ML models can approach or exceed human performance for certain tasks, such as finding patterns in data.

- Media generation:

- This is similar to text generation, but for audio, video or image data. Generated media is described through natural language. Examples include text-to-image generation, video generation, and voice or image cloning. Media generation may include the ability to vary the style of the generated media.

- Model poisoning attacks:

- Occurs when an adversary intentionally manipulates an AI / ML model's parameters to cause it to behave in an undesirable way.

- Phishing:

- An attempt by a third party to solicit confidential information from an individual, group or organization by mimicking or spoofing a specific, usually well-known brand, usually for financial gain. Phishers attempt to trick users into disclosing personal data, such as credit card numbers, online banking credentials, and other sensitive information, which they may then use to commit fraudulent acts.

- Prompt injection:

- A type of cyber attack against ML systems. Attackers disguise malicious inputs as legitimate prompts, manipulating generative AI systems into unknowingly carrying out the attackers’ intentions.

- Social engineering:

- The practice of obtaining confidential information by manipulation of legitimate users. A social engineer will commonly use the telephone or internet to trick people into revealing sensitive information. For example, phishing is a type of social engineering.

- Text generation:

- The process of using a small amount of text to generate a larger amount of text. This may include the ability to vary the style of the generated text.

- Text summarization:

- The process of condensing large amounts of text into shorter summaries while retaining the key meaning of the original text. This may include explicit links to source material or an abstraction or paraphrase of the intended meaning.